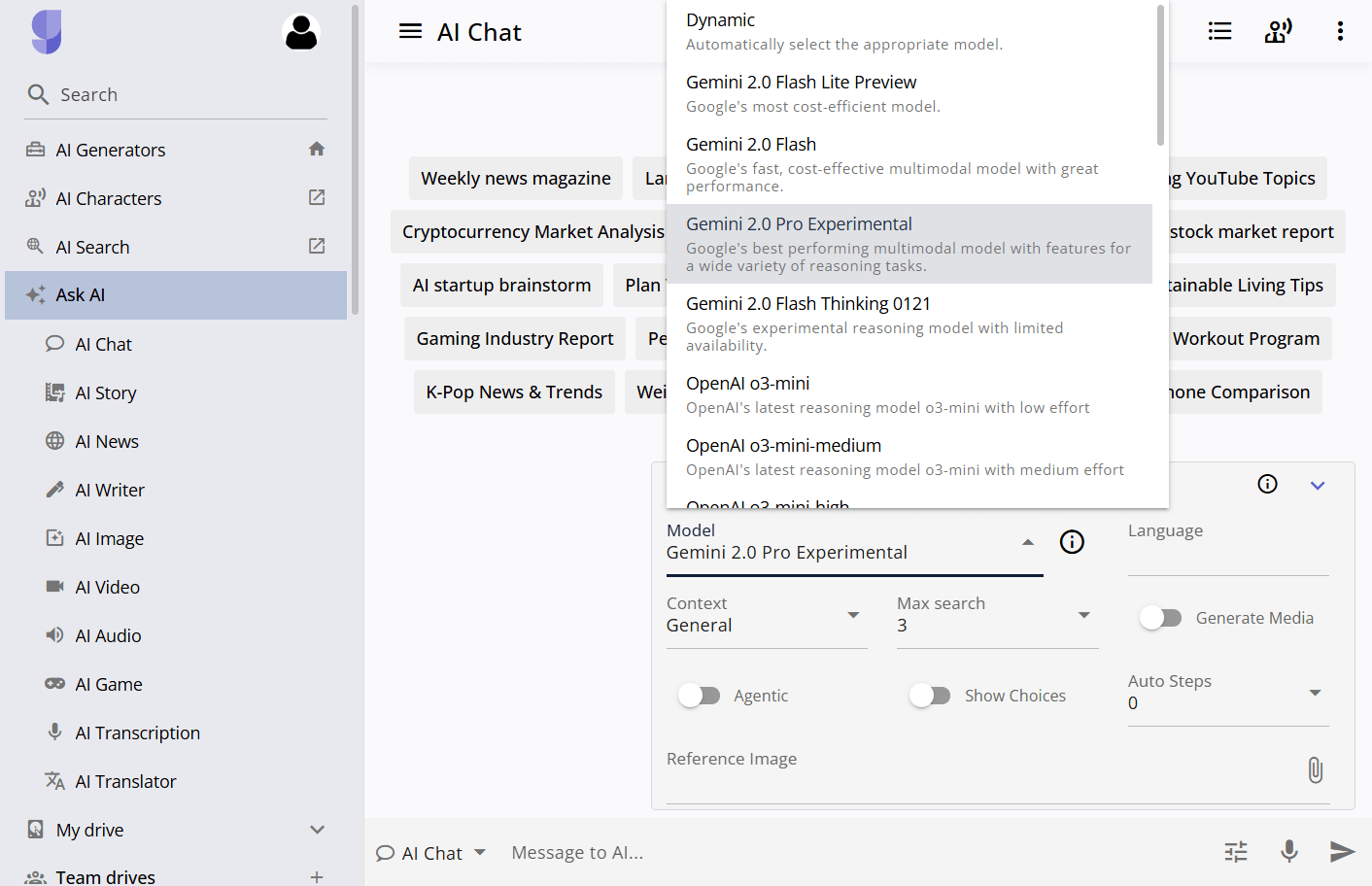

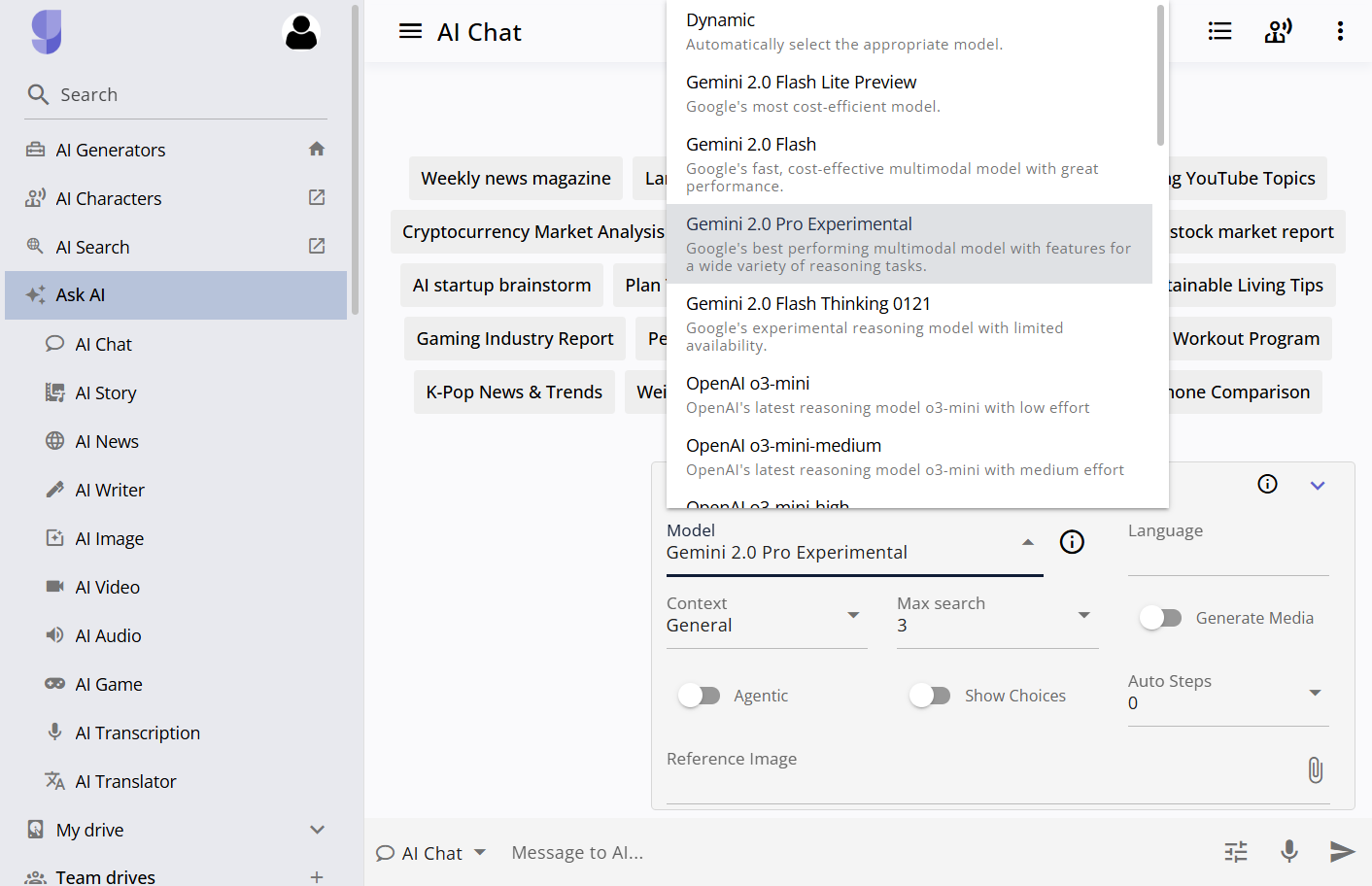

Why Use Gemini 2.0 on GizAI?

🚀 More Free Uses – GizAI provides free Gemini 2.0 access without login. See here for details.

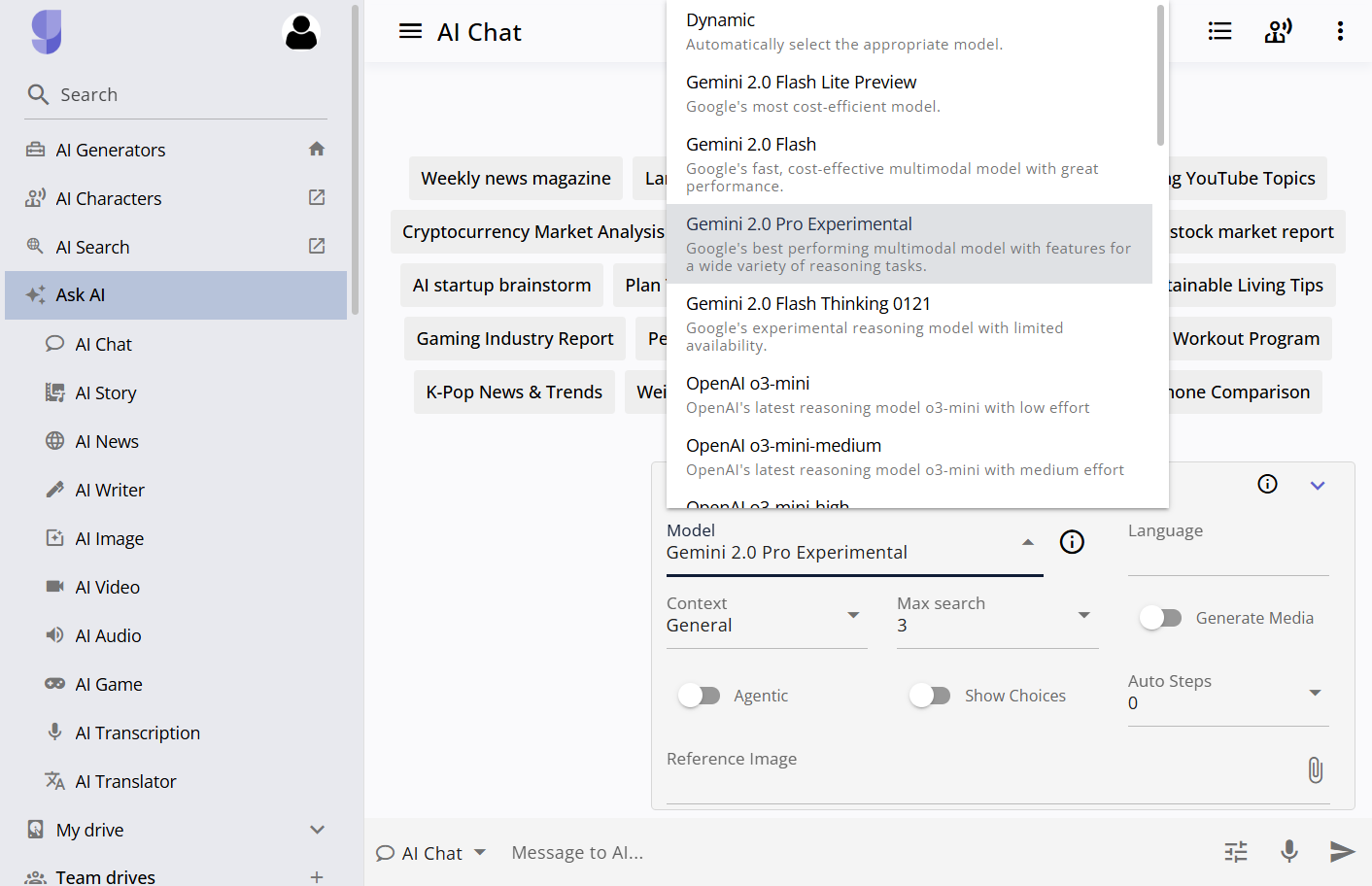

🔥 All Variants Available – Use Gemini 2.0 Flash, Gemini 2.0 Flash Lite, and Gemini 2.0 Pro without restrictions.

💡 More AI Models – Access o3-mini, DeepSeek R1, FLUX 1.1, SD 3.5, LTX Video, and more for free.

🔗 Get Started Now – No sign-up needed! Try it instantly 👉 Gemini 2.0 on GizAI.

Google Gemini 2.0

Google’s latest leap in artificial intelligence is here—introducing Gemini 2.0, an advanced family of AI models engineered to revolutionize developer tools, user experiences, and interactive applications. With Gemini 2.0, Google DeepMind has pushed the boundaries of efficiency, reasoning, and pure computational power, delivering a suite of models that cater to diverse needs ranging from coding assistance and complex reasoning to cost-efficient, high-speed multimedia processing. In this blog post, we explore the features, updates, and groundbreaking capabilities of Gemini 2.0, how it will affect AI development across industries, and why it is set to become a cornerstone technology in the era of agentic AI.

Google unveiled the new Gemini 2.0 Flash model to everyone on February 5, 2025, marking a significant milestone in making advanced AI accessible at scale for both developers and end users. With an extended set of functionalities that include Gemini 2.0 Flash, Gemini 2.0 Flash-Lite, and Gemini 2.0 Pro Experimental, this update redefines what is possible with modern AI applications.

“Gemini 2.0 is now available to everyone.”[^1]

In this article, we will cover the evolution of the Gemini series, detail the enhanced features of each model variant, and provide insights on how developers and enterprises can harness these capabilities through groundbreaking services like Google AI Studio and Vertex AI.

Introducing Gemini 2.0: A New Milestone in AI

Gemini 2.0 represents the culmination of years of research and development in deep learning and agentic AI. The new model family is designed for a range of tasks—from simple text generation to advanced reasoning in multimodal contexts. Google’s new approach leverages a multi-model architecture that makes it truly versatile and adaptive to diverse requirements.

Key Updates and Model Variants

Google has segmented the Gemini 2.0 family into three main product offerings:

-

Gemini 2.0 Flash

-

Gemini 2.0 Flash-Lite

-

Gemini 2.0 Pro Experimental

Each caters to specific needs while sharing common aspects such as a broad multimodal input capability and an impressive token processing window.

Gemini 2.0 Flash

Initially introduced at Google I/O 2024, the Flash series has long been favored by developers for its speed, reliability, and performance scalability. The updated Gemini 2.0 Flash model comes with:

-

High Efficiency and Low Latency: Optimized for real-time responses, making it ideal for high-frequency tasks at scale.

-

Multimodal Reasoning: Capable of integrating text, images, and soon-to-be-added audio modalities, demonstrating its ability to understand context over a massive 1 million token context window.

-

Enhanced Developer Access: Now available via the Gemini API in Google AI Studio and Vertex AI, allowing developers to build production-grade applications quickly and efficiently.

With these advancements, Gemini 2.0 Flash is set to power new interactive experiences across Google’s suite of products, including the widely used Gemini app for desktop and mobile.

Gemini 2.0 Flash-Lite

Cost-efficiency is a cornerstone of any large-scale AI deployment, and Gemini 2.0 Flash-Lite has been engineered to offer improved quality without a significant increase in resource consumption. The Flash-Lite variant stands out due to:

-

Economic Scalability: Designed with cost in mind, it outperforms its predecessor (1.5 Flash) while still providing speed and reliability at a lower operational cost.

-

Consistency Across Benchmarks: It delivers better performance on several key benchmarks, ensuring that even intensive applications remain cost-effective.

-

Broad Applicability: Ideal for applications that require high-volume processing of visual data, as evidenced by its ability to generate relevant one-line captions for tens of thousands of photos at minimal cost.

The introduction of Flash-Lite ensures that even start-ups and smaller enterprises with limited budgets can integrate robust AI solutions into their technology stacks.

Gemini 2.0 Pro Experimental

For users requiring the highest computational power and capacity, the Gemini 2.0 Pro Experimental model provides unmatched performance in:

-

Coding and Complex Prompts: Developed to handle advanced coding tasks, it is engineered for developers who need intricate code generation and debugging capabilities.

-

Expanded Context Understanding: Boasting a context window of up to 2 million tokens, it can analyze and understand vast datasets, enabling applications that require deep reasoning and analysis.

-

Tool-Calling Integration: The model has been enhanced with the ability to call external tools like Google Search and execute code, making it incredibly versatile for real-time applications.

This model is particularly tailored for high-end applications in software development, research, and creative industries, where the demand for precision and detailed insight is critical.

How Gemini 2.0 is Transforming Developer Ecosystems

Integration with Google AI Studio and Vertex AI

With the release of Gemini 2.0, Google has strategically expanded access to its cutting-edge AI infrastructure. The updated models are integrated into both Google AI Studio and Vertex AI.

-

Google AI Studio: This environment provides developers with a comprehensive toolkit to design, test, and deploy AI applications using Gemini 2.0. The streamlined interface and powerful debugging tools ensure that developers can quickly iterate and improve their models.

-

Vertex AI: Google’s Vertex AI is a managed machine learning platform that simplifies the deployment of production-grade ML models. With Gemini 2.0, users can expect seamless integration into their existing workflows and a significant boost in performance and security measures.

By making these models accessible on such widely adopted platforms, Google ensures that both large enterprises and independent developers can build robust, future-proof applications without having to worry about infrastructure logistics.

Powering the Next Generation of AI Applications

Gemini 2.0 is not just an incremental update—it is designed to serve as the foundation for the next generation of AI initiatives, including:

-

Virtual Assistants and Chatbots: Enhanced reasoning capabilities and multimodal support mean that virtual assistants can now offer more nuanced responses. They have increased sensitivity to context and can explain their reasoning steps, leading to more transparent interactions.

-

Creative Content Generation: Writers, marketers, and creative professionals can leverage the powerful text and image recognition capabilities of Gemini 2.0 to generate high-quality content. Its ability to handle large amounts of data enables detailed research assistance and idea generation.

-

Coding and Debugging Support: For developers, the Gemini 2.0 Pro Experimental model offers sophisticated code generation and debugging tools. This means faster turnaround times and more efficient problem-solving in software development cycles.

-

Advanced Analytics and Research: In scientific and business research, accessing and analyzing large datasets quickly is crucial. The expanded token capacities and improved reasoning of Gemini 2.0 facilitate comprehensive data analysis, opening new horizons in research capabilities.

Google’s approach with Gemini 2.0 demonstrates a commitment to enabling safe, efficient, and innovative AI integrations that respond to both societal needs and developer demands.

Enhanced Performance across Benchmarks

The Gemini 2.0 family has been rigorously benchmarked to ensure that it meets the high expectations set by its predecessors. Key performance improvements include:

Expanded Context Windows

One of the most significant advances in the Gemini 2.0 lineup is the expanded context windows available in various models:

-

Flash and Flash-Lite: These models are designed with a 1 million token context window, allowing comprehensive analysis of large text and multimedia datasets.

-

Pro Experimental: With a staggering 2 million token context window, this variant is tailored for applications requiring detailed contextual understanding over extremely large bodies of text and data.

An expanded context window allows the models to perform more precise reasoning by considering a broader swath of input data, which is particularly useful in dynamic applications such as real-time communication and extensive data analytics.

Multimodal Capabilities

Gemini 2.0 continues to push the envelope with its multimodal input and output capabilities. While the current release focuses primarily on text-based responses enhanced with image recognition, further modalities like audio translation and video analysis are on the horizon. These improvements pave the way for:

-

Seamless User Experiences: Enabling users to interact with AI systems in a natural and integrated manner. Whether it’s voice commands or image-based searches, the system can seamlessly switch between modalities.

-

Enhanced Problem-Solving: By integrating multiple types of data, the AI can offer more comprehensive solutions—perfect for applications where context shifts rapidly between text, images, and other media types.

Improved Benchmark Scores

Google has compared the performance of Gemini 2.0’s variants to previous offerings and other contemporary models. In key areas such as general knowledge, code generation, reasoning, factuality, multilingual understanding, and long-context comprehension, each variant of Gemini 2.0 has showcased substantial improvements. The benchmarks indicate:

-

Increased Efficiency: Developers and users will notice a significant improvement in response times and overall system efficiency.

-

Enhanced Task Accuracy: Better handling of complex prompts and an improved ability to call and integrate external tools contribute to enhanced task accuracy.

-

Cost-Effective Solutions: Particularly with the release of Gemini 2.0 Flash-Lite, users benefit from a model that offers top-tier performance at a fraction of the cost of previous models.

These advancements are not mere incremental updates; they represent a paradigm shift in what modern AI systems are capable of achieving.

Robust Safety and Responsibility Measures

With great power comes great responsibility. Google is well aware of the potential risks that come with sophisticated AI models such as Gemini 2.0. The company has implemented several robust safety measures to ensure that the deployment of these models remains secure and responsible.

Reinforcement Learning-Based Safety

A key component of the Gemini 2.0 update is the integration of advanced reinforcement learning techniques into the reinforcement learning from human feedback (RLHF) process. This system uses Gemini itself to critique its responses and identify potential issues proactively. By leveraging these in-model feedback loops, Google has achieved:

-

Improved Accuracy: The models can now self-critique and refine their outputs, leading to responses that are more accurate and contextually relevant.

-

Enhanced Sensitivity Handling: Advanced techniques ensure that the models better understand and handle sensitive or controversial topics, reducing the risk of misuse.

Automated Red Teaming

In light of the rising concerns about cybersecurity risks in AI, Google employs automated red teaming techniques. This process involves simulating potential cybersecurity breaches, such as indirect prompt injection attacks, to assess vulnerabilities preemptively. The proactive approach to addressing security risks helps to ensure that:

-

Data Security is Maintained: The models operate safely within controlled parameters.

-

Potential Exploits are Mitigated: Automated red teaming reduces the possibility of potential attackers exploiting weaknesses in the system’s design.

Commitment to Ethical AI

Google’s commitment to ethical AI is evident in the design and release of Gemini 2.0. The company continues to invest in research and development to ensure that its AI models operate within well-defined ethical frameworks. This commitment is aligned with global standards for responsible AI, ensuring that:

-

Transparency is Prioritized: Users can trust the system knowing that safety measures are state-of-the-art.

-

Inclusive Policy Development: The new models incorporate feedback from diverse user communities and ethical boards, ensuring a broad consensus on responsible usage standards.

By combining reinforcement learning, automated red teaming, and a firm commitment to ethical practices, Google is positioning Gemini 2.0 as a safe, secure, and user-friendly model that meets the demands of modern AI applications.

Real-World Applications and Industry Impact

The release of Gemini 2.0 stands to significantly impact various industries. From everyday consumer applications to high-end enterprise solutions, the enhanced capabilities of Gemini 2.0 offer far-reaching benefits.

Virtual Assistants and Chatbots

Virtual assistants have become indispensable tools in modern society. Gemini 2.0’s ability to process and reason about large datasets in real time means that virtual assistants can now provide:

-

More Nuanced Interactions: Enhanced reasoning allows the AI to explain its suggestions, making interactions more transparent.

-

Higher Reliability: With improved contextual understanding, chatbots can handle complex multi-turn conversations with less risk of misunderstanding the user’s intent.

-

Faster Response Times: Low latency ensures that users can expect near-instantaneous feedback, essential for real-time applications like customer support.

This transformation means that customer service applications, online banking support, and even personal digital assistants will be more efficient, reliable, and empathetic.

Coding and Software Development

For developers, Gemini 2.0 Pro Experimental offers groundbreaking improvements in coding assistance:

-

Rapid Code Generation: The increased coding capabilities enable the AI to generate code snippets, debug issues, and even recommend architectural changes with minimal input.

-

Enhanced Complex Task Handling: With a context window extending to 2 million tokens, the model can manage complex programming tasks that require an in-depth understanding of large codebases.

-

Tool Integration: The ability to call external tools such as Google Search and code execution environments ensures that developers can integrate Gemini 2.0 seamlessly into their existing workflows.

The impact of these features is profound, as they reduce development cycles, eliminate many repetitive coding tasks, and empower software engineers to focus on creative problem solving.

Creative Content Generation and Media

In the realm of creative industries, Gemini 2.0 is set to transform how content is produced and curated:

-

Automated Content Creation: Marketers and writers can leverage the multimodal capabilities of Gemini 2.0 to generate high-quality content, from articles and social media posts to visual media enhancements.

-

Dynamic Visual Media Processing: With the ability to process images and soon-to-be-integrated audio and video, Gemini 2.0 will provide creative tools that automatically edit, caption, and optimize multimedia content.

-

Enhanced Research Capabilities: The model’s capacity to handle extensive datasets means that content creators can produce research-based reports that are data-rich and insightful.

These improvements streamline the content creation process, empower marketing teams to act quickly on trends, and reduce the time required to produce high-quality media assets.

Data Analytics and Research

Organizations that rely on in-depth data analysis will find Gemini 2.0 invaluable:

-

Comprehensive Data Synthesis: The expanded context window allows for thorough examination and synthesis of large volumes of textual and visual data.

-

Predictive Analytics: With improved reasoning, the Gemini 2.0 models aid in forecasting trends and patterns, leading to better-informed business and research decisions.

-

Enhanced Reporting: The ability to generate detailed, natural-language reports from complex datasets means that stakeholders receive actionable insights without the need for extensive manual analysis.

By automating and enhancing sophisticated data tasks, Gemini 2.0 is poised to become an essential tool for industries ranging from finance and healthcare to scientific research and public policy.

Leveraging Gemini 2.0: How You Can Get Started

For developers and enterprises eager to explore the Gemini 2.0 ecosystem, Google provides comprehensive platforms and documentation to facilitate a smooth integration process.

Getting Started with Google AI Studio

Google AI Studio is the primary platform for interacting with Gemini 2.0 models. The studio offers a user-friendly interface where developers can:

-

Experiment with different Gemini 2.0 variants.

-

Integrate the models into existing applications.

-

Monitor performance metrics and optimize model responses in real time.

The seamless access to the models via AI Studio means that developers can quickly prototype and launch their AI-driven applications with little downtime.

Deploying on Vertex AI

Vertex AI complements the AI Studio by offering production-grade deployment options. It allows users to:

-

Scale their AI applications reliably.

-

Manage model deployments with advanced security and monitoring tools.

-

Integrate with other Google Cloud services to build end-to-end solutions across diverse industries.

The integration of Gemini 2.0 in Vertex AI means that organizations can deploy powerful AI solutions with confidence, knowing that they are backed by Google’s robust cloud infrastructure.

Community and Ecosystem Support

Google continues to foster a vibrant ecosystem around its AI innovations. Developers can engage with a rich community to share insights, offer feedback, and collaborate on projects leveraging Gemini 2.0. Regular updates, detailed documentation, and hands-on tutorials ensure that users always have access to the latest advancements and best practices.

By participating in the extensive community support channels, developers and enterprises alike can stay ahead of the innovation curve, learn from peer experiences, and make the most of what Gemini 2.0 has to offer.

The Future of AI with Gemini 2.0

The Gemini 2.0 family of models is not a static product—it is a living, evolving suite of technologies that signals the dawn of an era where AI is more accessible, more powerful, and more integrated into every aspect of our daily lives.

Continuous Improvements and Updates

Google’s commitment to continuous improvement is evident from its scheduled updates, such as the expected integration of additional modalities like audio and video analysis in future releases. This forward-thinking approach ensures that Gemini 2.0 remains at the forefront of AI innovation.

Expanding Use Cases

As more developers and enterprises integrate Gemini 2.0 into their products and services, new use cases are anticipated to emerge. Innovations in personalized healthcare, autonomous vehicles, interactive gaming, and smart home devices are just a few domains where Gemini 2.0 could spark groundbreaking advancements.

Ethical and Responsible AI

Google’s robust safety protocols and commitment to responsible AI use set a solid foundation for future developments. As ethical concerns in AI continue to be a focal point globally, Gemini 2.0 is designed with the flexibility to adapt to new regulatory requirements and societal expectations.

This model’s ability to self-critique and update its safety measures in real time means that it is well-prepared to meet the evolving challenges of AI ethics, making it not just a technological marvel but also a socially responsible one.

Global Impact and Democratization of AI

The democratization of high-performance AI technology is at the core of Google’s vision for Gemini 2.0. Making these models readily available through platforms like Google AI Studio and Vertex AI means that innovation is no longer confined to tech giants alone. Start-ups, academic institutions, and independent developers now have access to state-of-the-art AI technology, which can drive economic growth, creative endeavors, and scientific breakthroughs on a global scale.

With Gemini 2.0, Google is catalyzing a future where AI is accessible to all, paving the way for a more interconnected and intelligent world.

Frequently Asked Questions (FAQ)

Q1: What is Gemini 2.0 and how is it different from previous versions?

A1: Gemini 2.0 is Google’s latest family of AI models that includes variants like Gemini 2.0 Flash, Flash-Lite, and Pro Experimental. It features expanded context windows, improved multimodal capabilities, enhanced reasoning, and cost-effective options while offering far more robust performance for diverse tasks compared to previous versions[^1][^2].

Q2: What are the main differences between Gemini 2.0 Flash, Flash-Lite, and Pro Experimental?

A2:

• Gemini 2.0 Flash is optimized for speed, high efficiency, and real-time interactions, making it a versatile workhorse model.

• Gemini 2.0 Flash-Lite focuses on cost-efficiency and delivering high-quality performance at lower expenses, ideal for high-volume processing of visual data.

• Gemini 2.0 Pro Experimental is tailored for complex tasks such as coding and deep reasoning, featuring an expanded context window of 2 million tokens and the ability to integrate external tools for enhanced performance[^1][^3].

Q3: How can developers access Gemini 2.0 models?

A3: Developers can access the Gemini 2.0 models through Google AI Studio and Vertex AI. These platforms provide robust environments for development, testing, and deployment, ensuring that companies can easily integrate these models into their production environments[^1][^3].

Q4: What safety measures does Google implement with Gemini 2.0?

A4: Google has implemented multiple safety measures, including advanced reinforcement learning techniques that allow the models to self-critique and refine outputs, along with automated red teaming to safeguard against cybersecurity attacks like indirect prompt injection. These measures ensure responsible use and continuous adherence to security protocols[^1][^3].

Q5: What industries are expected to benefit most from Gemini 2.0?

A5: Gemini 2.0 is expected to benefit a range of industries including customer service (through enhanced virtual assistants and chatbots), software development (via advanced code generation tools), creative media and content generation, data analytics, healthcare, and research. Its versatility and robust performance make it a powerful asset across multiple sectors[^1][^3].

Q6: Will there be future updates to Gemini 2.0?

A6: Yes, Google has committed to continuous improvements with Gemini 2.0. Future updates are expected to include additional modalities, enhanced reasoning capabilities, and further optimizations in performance and safety, ensuring that the model remains at the forefront of AI technology and innovation[^1].

Conclusion

Google’s Gemini 2.0 is a milestone achievement in the evolution of artificial intelligence. Combining high-speed performance with deep, multimodal reasoning capabilities, the Gemini 2.0 family is set to empower developers, businesses, and consumers alike. Whether you are looking to build advanced chatbots, implement cutting-edge coding solutions, or create dynamic content at scale, Gemini 2.0 offers a robust, flexible, and future-proof solution.

The integration of Gemini 2.0 into platforms such as Google AI Studio and Vertex AI signals a democratization of high-performance AI technologies, allowing innovators from all backgrounds to harness its power. Moreover, Google’s unwavering commitment to safety and responsible AI development ensures that these groundbreaking models are not only incredibly effective but also ethically and securely deployed.

As we look toward the future, Gemini 2.0 is poised to redefine industry standards and inspire innovation far beyond today’s technological limitations. With constant improvements on the horizon and robust community support, Gemini 2.0 will undoubtedly play a crucial role in shaping the next era of AI-driven solutions.

By embracing the new Gemini 2.0 models, developers and enterprises can capitalize on enhanced efficiency, unprecedented scale, and transformative capabilities—ushering in a new era of intelligent applications that will shape the global landscape in the coming years.

References

[^1]: Google Blog. (2025, February 05). Gemini 2.0 is now available to everyone. Retrieved from https://blog.google/technology/google-deepmind/gemini-model-updates-february-2025/

[^2]: AL24 News. (2025, February 04). Google Officially Launches Its New AI Model: Gemini 2.0 Flash. Retrieved from https://al24news.com/en/google-officially-launches-its-new-ai-model-gemini-2-0-flash/

[^3]: TechCrunch. (2025, February 05). Google launches new AI models and brings ‘thinking’ to Gemini. Retrieved from https://techcrunch.com/2025/02/05/google-launches-new-ai-models-and-brings-thinking-to-gemini/

By leveraging Gemini 2.0, the future of interactive, responsive, and efficient AI applications is here. Embrace this innovative technology to transform your business processes, enhance user experiences, and drive unprecedented growth in an increasingly digital world. Enjoy exploring the endless possibilities with Google Gemini 2.0!